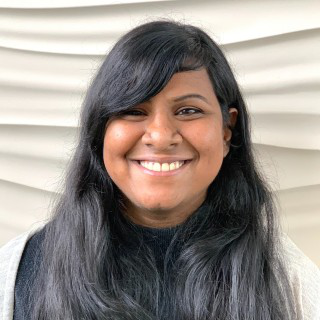

Abhilasha Ravichander

/ɑ.bʰi.ˈla.ʃə/

Update: I will be joining the Max Planck Institute for Software Systems as an Assistant Professor starting Fall 2025! I will be recruiting Ph.D. students and interns. Please see this page for more details.

I am a postdoctoral scholar at the Paul G. Allen Center for Computer Science and Engineering at the University of Washington, where I work with Yejin Choi. I received my Ph.D. from Carnegie Mellon University in 2022.